Dive

What is Dive

Dive is an open-source MCP Host desktop application that integrates with any LLM that supports function calling.

Use cases

Use cases for Dive include creating AI-driven chatbots, developing intelligent assistants, automating customer service responses, and integrating AI functionalities into existing applications.

How to use

Users can download and install Dive on their preferred operating system (Windows, MacOS, or Linux), then configure it to connect with their desired LLMs by entering API keys and setting custom instructions.

Key features

Key features include universal LLM support, cross-platform compatibility, Model Context Protocol (MCP) integration, multi-language support, advanced API management, custom instructions for AI behavior, and an auto-update mechanism.

Where to use

Dive can be used in various fields such as software development, AI research, customer support automation, and any application requiring advanced AI interaction.

Clients Supporting MCP

The following are the main clients that support the Model Context Protocol. Click the link to visit the official website for more information.

Content

Dive AI Agent 🤿 🤖

Dive is an open-source MCP Host desktop application that integrates seamlessly with any LLM that supports function calling. ✨

Features 🎯

- 🌐 Universal LLM Support: Compatible with ChatGPT, Anthropic, Ollama and OpenAI-compatible models

- 💻 Cross-Platform: Available for Windows, MacOS, and Linux

- 🔄 Model Context Protocol: Enables seamless MCP AI agent integration in both stdio and SSE modes

- 🌍 Multi-Language Support: Traditional Chinese, Simplified Chinese, English, Spanish, Japanese, and Korean, with more coming soon

- ⚙️ Advanced API Management: Multiple API keys and model switching support

- 💡 Custom Instructions: Personalized system prompts for tailored AI behavior

- 🔄 Auto-Update Mechanism: Automatically checks for and installs the latest application updates

Recent updates (2025/4/21)

- 🚀 Dive MCP Host v0.8.0: DiveHost, rewritten in Python, is now a separate project, dive-mcp-host

- ⚙️ Enhanced LLM Settings: Add, modify, delete LLM Provider API Keys and custom Model IDs

- 🔍 Model Validation: Validate or skip validation for models supporting Tool/Function calling

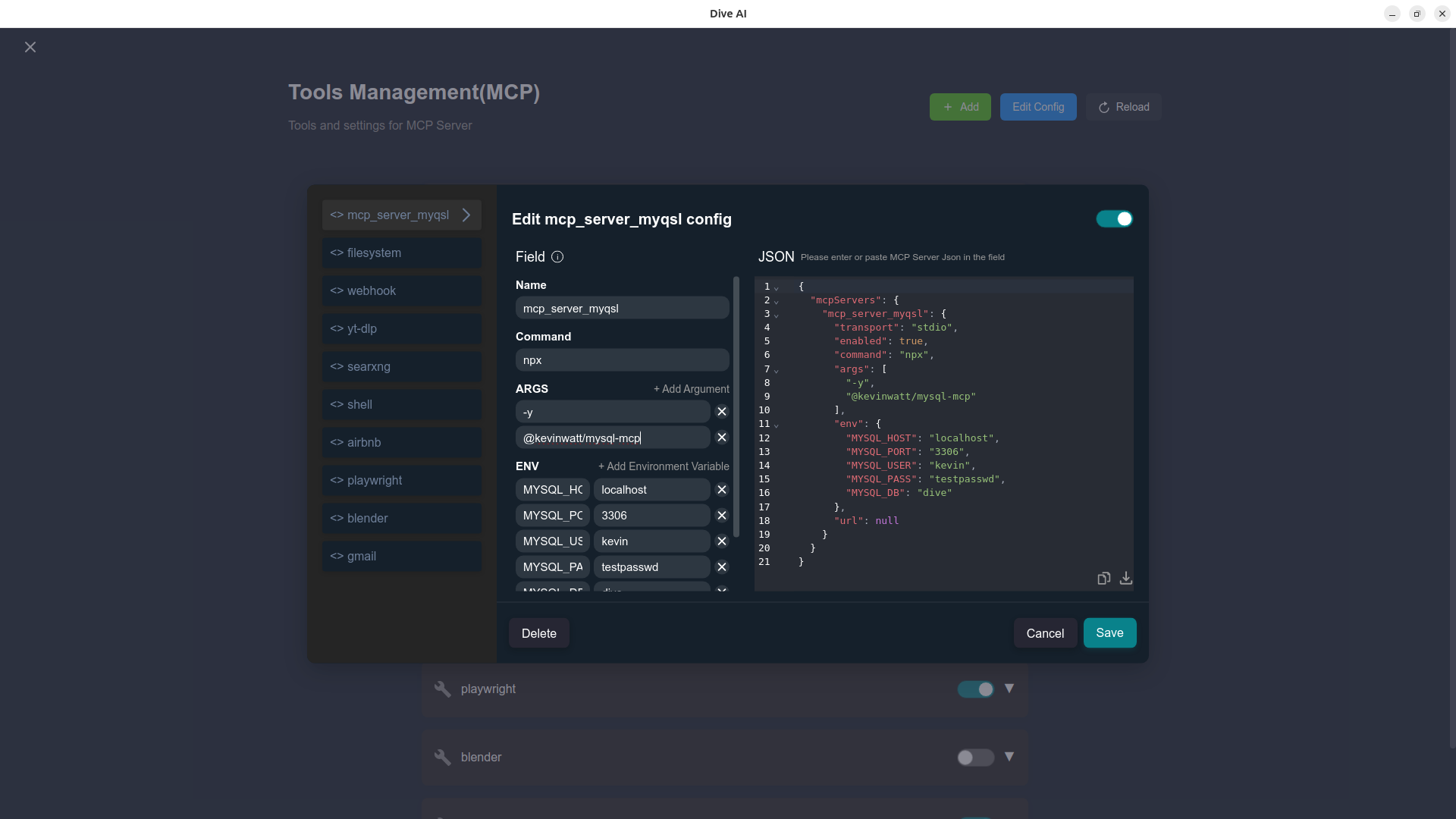

- 🔧 Improved MCP Configuration: Add, edit, and delete MCP tools directly from the UI

- 🌍 Japanese Translation: Added Japanese language support

- 🤖 Extended Model Support: Added Google Gemini and Mistral AI models integration

Important: Because DiveHost was migrated from TypeScript to Python in v0.8.0, configuration files and chat history are not upgraded automatically. If you need access to your old data after upgrading, you can downgrade to a previous version.

Download and Install ⬇️

Get the latest version of Dive:

For Windows users: 🪟

- Download the .exe version

- Python and Node.js environments are pre-installed

For MacOS users: 🍎

- Download the .dmg version

- You need to install the Python and Node.js environments yourself, including the uvx and npx launchers

- Follow the installation prompts to complete setup

For Linux users: 🐧

- Download the .AppImage version

- You need to install the Python and Node.js environments yourself, including the uvx and npx launchers

- For Ubuntu/Debian users:

  - You may need to add the --no-sandbox parameter

  - Or modify system settings to allow sandbox

  - Run chmod +x to make the AppImage executable

MCP Tips

While the system comes with a default echo MCP Server, your LLM can access more powerful tools through MCP. Here’s how to get started with two beginner-friendly tools: Fetch and Youtube-dl.

Quick Setup

Add this JSON configuration to your Dive MCP settings to enable both tools:
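
The configuration block itself is not reproduced at this point in the text. As a minimal sketch, assuming the Fetch tool is the mcp-server-fetch package launched with uvx and the Youtube-dl tool is the @kevinwatt/yt-dlp-mcp package launched with npx, an entry for both tools might look like the following. The server names (fetch, youtubedl), the commands, and the package names are assumptions, not values confirmed here:

```json
{
  "mcpServers": {
    "fetch": {
      "command": "uvx",
      "args": ["mcp-server-fetch"],
      "enabled": true
    },
    "youtubedl": {
      "command": "npx",
      "args": ["@kevinwatt/yt-dlp-mcp"],
      "enabled": true
    }
  }
}
```

Both entries start a local process over stdio, which is why uvx and npx need to be available on your system. Replace the package names with those of the servers you actually install.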

Using SSE Server for MCP

You can also connect to an external MCP server via SSE (Server-Sent Events). Add this configuration to your Dive MCP settings:

    {
      "mcpServers": {
        "MCP_SERVER_NAME": {
          "enabled": true,
          "transport": "sse",
          "url": "YOUR_SSE_SERVER_URL"
        }
      }
    }

Additional Setup for yt-dlp-mcp

yt-dlp-mcp requires the yt-dlp package. Install it based on your operating system:

Windows

winget install yt-dlp

MacOS

brew install yt-dlp

Linux

pip install yt-dlp

Build 🛠️

See BUILD.md for more details.

Connect With Us 🌐

- 💬 Join our Discord

- 🐦 Follow us on Twitter/X Reddit Thread

- ⭐ Star us on GitHub

- 🐛 Report issues on our Issue Tracker
