HyperChat

What is HyperChat

HyperChat is an open-source chat client built around openness. It uses the APIs of various LLMs and supports MCP (Model Context Protocol) to deliver a good chat experience and productivity tools.

Use cases

Use cases for HyperChat include team collaboration, automated task management, educational tutoring, and real-time customer service interactions.

How to use

To use HyperChat, run it from the command line with `npx -y @dadigua/hyper-chat`, then open http://localhost:16100/123456/ in a web browser (default port 16100, default password 123456). A Docker image is also available and can be pulled with `docker pull dadigua/hyperchat-mini:latest`.
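The web access address appears to combine the port and the password. The sketch below assumes the pattern `http://<host>:<port>/<password>/`, which is inferred only from the default address above; the function name `web_access_url` is hypothetical and not part of HyperChat.

```python
from urllib.parse import quote

# Defaults taken from the text above.
DEFAULT_PORT = 16100
DEFAULT_PASSWORD = "123456"


def web_access_url(port: int = DEFAULT_PORT,
                   password: str = DEFAULT_PASSWORD,
                   host: str = "localhost") -> str:
    """Build the web access URL.

    Assumption: the URL has the form http://<host>:<port>/<password>/,
    inferred from the default address; not confirmed for other values.
    """
    # Percent-encode the password so it is safe as a URL path segment.
    return f"http://{host}:{port}/{quote(password, safe='')}/"


print(web_access_url())
```

With the defaults this reproduces the address given above. If you change the port or password, check the resulting address against the HyperChat documentation.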

Key features

Key features of HyperChat include support for multiple operating systems (Windows, MacOS, Linux), command line operation, Docker compatibility, WebDAV synchronization, MCP extensions, dark mode, resource and prompt support, multi-conversation chatting, scheduled tasks, and model comparison in chat.

Where to use

HyperChat can be used in various fields including software development, customer support, education, and any area that requires efficient communication and productivity tools.


Introduction

HyperChat is an open-source chat client that supports MCP and can use various LLM APIs to achieve the best chat experience, as well as implement productivity tools.

- Supports OpenAI-style LLMs: `OpenAI`, `Claude`, `Claude(OpenAI)`, `Qwen`, `Deepseek`, `GLM`, `Ollama`, `xAI`, `Gemini`.
- Fully supports MCP.

DEMO

Features:

- [x] 🪟Windows + 🍏MacOS + Linux
- [x] Command-line run: `npx -y @dadigua/hyper-chat`, default port 16100, password 123456, Web access http://localhost:16100/123456/
- [x] Docker
  - Command-line version: `docker pull dadigua/hyperchat-mini:latest`
  - Ubuntu desktop + Chrome + BrowserUse version (coming soon)
- [x] `WebDAV` supports incremental synchronization, using hashes for the fastest sync.
- [x] `HyperPrompt` prompt syntax supports variables (text + js code variables), basic syntax checking + Hover real-time preview.
- [x] `MCP` extensions
- [x] Supports dark mode🌙
- [x] Resources, Prompts, Tools support
- [x] Supports English and Chinese
- [x] Supports `Artifacts`, `SVG`, `HTML`, `Mermaid` rendering
- [x] Supports defining Agents, allowing preset prompts and selecting permitted MCP
- [x] Supports scheduled tasks, specifying Agents to complete tasks on schedule, and viewing task completion status.
- [x] Supports `KaTeX` for displaying mathematical formulas; code rendering adds syntax highlighting and quick copy
- [x] Added `RAG`, based on MCP knowledge base
- [x] Introduced the ChatSpace concept, supports simultaneous chats across multiple dialogues
- [x] Supports selecting chat models for comparison
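The feature list mentions incremental WebDAV synchronization driven by hashes. The sketch below illustrates the general idea only: hash every file, compare against the hashes recorded for the remote side, and transfer just the differences. It is not HyperChat's implementation; the choice of SHA-256, the manifest layout (path mapped to hash), and the names `file_hash`, `local_manifest`, and `plan_sync` are all assumptions made for illustration.

```python
import hashlib
from pathlib import Path


def file_hash(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def local_manifest(root: Path) -> dict[str, str]:
    """Map each file path (relative to root) to its content hash."""
    return {
        p.relative_to(root).as_posix(): file_hash(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def plan_sync(local: dict[str, str], remote: dict[str, str]) -> dict[str, list[str]]:
    """Compare two manifests and list only the paths that need work.

    A path is uploaded when it is new or its hash differs from the remote
    one; it is deleted remotely when it no longer exists locally.
    """
    upload = sorted(p for p, h in local.items() if remote.get(p) != h)
    delete = sorted(p for p in remote if p not in local)
    return {"upload": upload, "delete": delete}


# Usage with hand-written manifests (hash values shortened for readability).
remote = {"a.json": "h1", "b.json": "h2", "old.json": "h3"}
local = {"a.json": "h1", "b.json": "changed", "c.json": "h4"}
print(plan_sync(local, remote))
# {'upload': ['b.json', 'c.json'], 'delete': ['old.json']}
```

Unchanged files such as `a.json` are skipped entirely, which is what makes the synchronization incremental: the cost of a sync scales with the number of changed files rather than the total amount of data.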

TODO:

- Implement multi-Agent interactive system.

LLM

| LLM | Usability | Remarks |
|---|---|---|
| claude | ⭐⭐⭐⭐⭐⭐ | No explanation needed |
| openai | ⭐⭐⭐⭐⭐ | Also perfectly supports multi-step function calls (gpt-4o-mini can also) |
| gemini flash 2.5 | ⭐⭐⭐⭐⭐ | Very easy to use |
| qwen | ⭐⭐⭐⭐ | Quite useful |
| doubao | ⭐⭐⭐ | Feels okay to use |
| deepseek | ⭐⭐⭐⭐ | Recently improved |

Usage

- Configure APIKEY, and ensure your LLM service is compatible with the OpenAI style.
- Ensure you have `uv` + `nodejs` etc. installed on your system.

uv: install using the command line, or check the official GitHub tutorial for uv.

    # MacOS
    brew install uv
    # Windows
    winget install --id=astral-sh.uv -e

nodejs: install using the command line, or download it from the official nodejs website.

    # MacOS
    brew install node
    # Windows
    winget install OpenJS.NodeJS.LTS
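To make "compatible with OpenAI style" concrete, the sketch below builds (without sending) a chat completions request in the shape the OpenAI API uses: a POST to `<base_url>/chat/completions` with a bearer token and a JSON body containing `model` and `messages`. The base URL, API key, and model name are placeholders, and `build_chat_request` is a hypothetical helper; this is a way to reason about whether a service is compatible, not how HyperChat itself issues requests.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str,
                       model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values: substitute your provider's base URL, key, and model.
req = build_chat_request("https://api.example.com/v1", "sk-placeholder",
                         "example-model", "Hello")
print(req.get_method(), req.full_url)
# To send it for real: urllib.request.urlopen(req), then parse the JSON reply.
```

A service that accepts this request shape at its own base URL is generally what "OpenAI-compatible" refers to.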

Development

    cd electron && npm install
    cd web && npm install
    npm install
    npm run dev

HyperChat User Communication

Super input: supports variables (text + js code variables), basic syntax checking, and real-time preview on hover

Chat supports selecting models for comparison

Supports clicking a tool name to debug it directly

Prompts when an MCP Tool is called + dynamic editing of the parameters the LLM passes to the Tool

Supports @ quick input + calling an Agent

Supports rendering Artifacts, SVG, HTML, Mermaid

Supports selecting MCPs + selecting a subset of their Tools

You can access it from anywhere and any device via the web, and set a password

Calls the terminal MCP to automatically analyze asar files and help unpack them

Calls the terminal to compile and upgrade nginx

Gaode Map MCP

One-click webpage creation and publishing (to Cloudflare)

Calls Google search to ask what the TGA Game of the Year is

Asks which games are free for a limited time, visiting the website through a tool call

Opens a webpage for you, analyzes the results, and writes them to a file

Uses web tools + command-line tools to open a GitHub README and learn from it, git clone the repository, and set up the development environment

Multi-chat Workspace + Night Mode

Scheduled task list + scheduled messages sent to an Agent to complete tasks

Install MCP from third parties (supports any MCP)

H5 interface

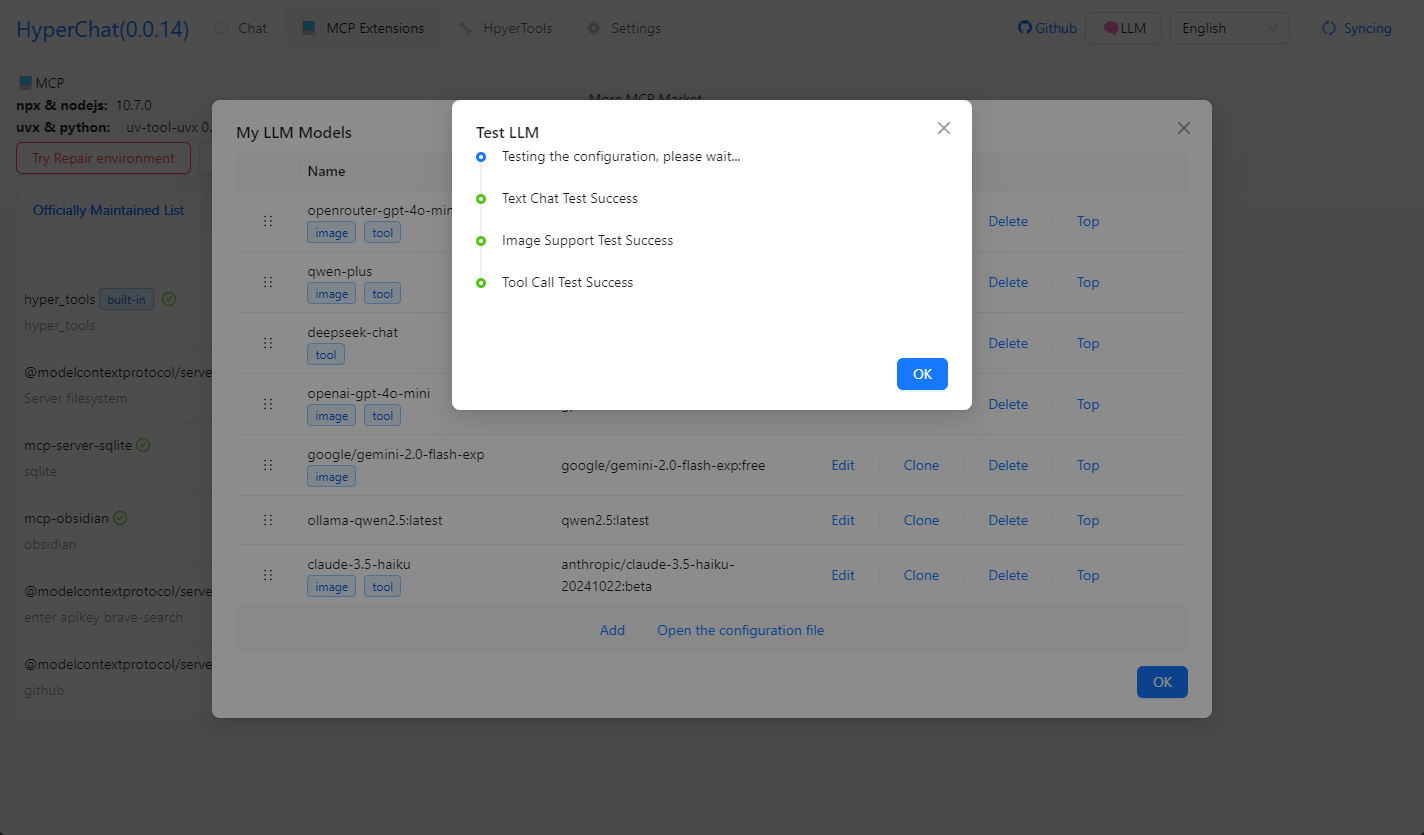

Testing model capabilities

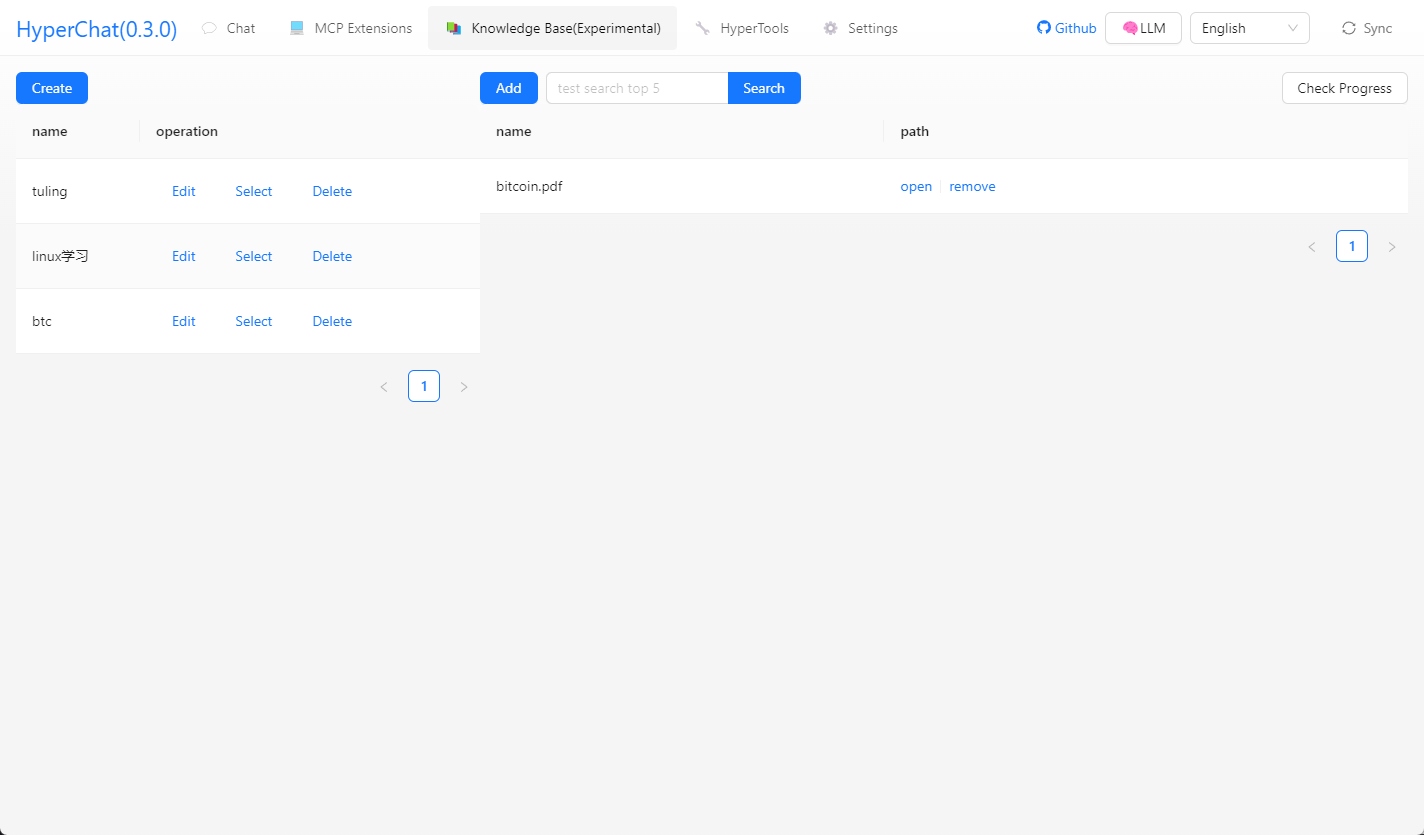

Knowledge base

Disclaimer

- This project is for learning and communication purposes only. The developers of this project are not responsible for anything you do with it, such as web crawling.
