TySVA (Learn TypeScript)

What is TySVA (Learn TypeScript)

TySVA, or TypeScript Voice Assistant, is an AI-driven tool designed to facilitate the learning of TypeScript through interactive engagement, utilizing voice and text inputs.

Use cases

TySVA is ideal for users seeking assistance in understanding TypeScript concepts, troubleshooting coding issues, or enhancing their programming skills through conversational learning. It can be particularly useful for beginners and developers wanting to quickly access information from TypeScript documentation.

How to use

To use TySVA, first clone the repository and set up the environment. You can choose between a Docker setup or a source code installation. After configuring necessary API keys and launching the application, you can access it via a web interface, which allows for both voice and text interactions.

Key features

Key features of TySVA include voice recognition for input, transcription capabilities, vector search for retrieving documentation, integration with multiple databases and APIs for enhanced response accuracy, and a conversational agent workflow to provide contextually relevant answers.

Where to use

TySVA can be used locally on your machine after following the installation steps. It is particularly suited for educational environments, personal learning setups, and programming workshops where TypeScript knowledge is required.

TySVA - TypeScript Voice Assistant🪄

Learn TypeScript by chatting effortlessly with AI

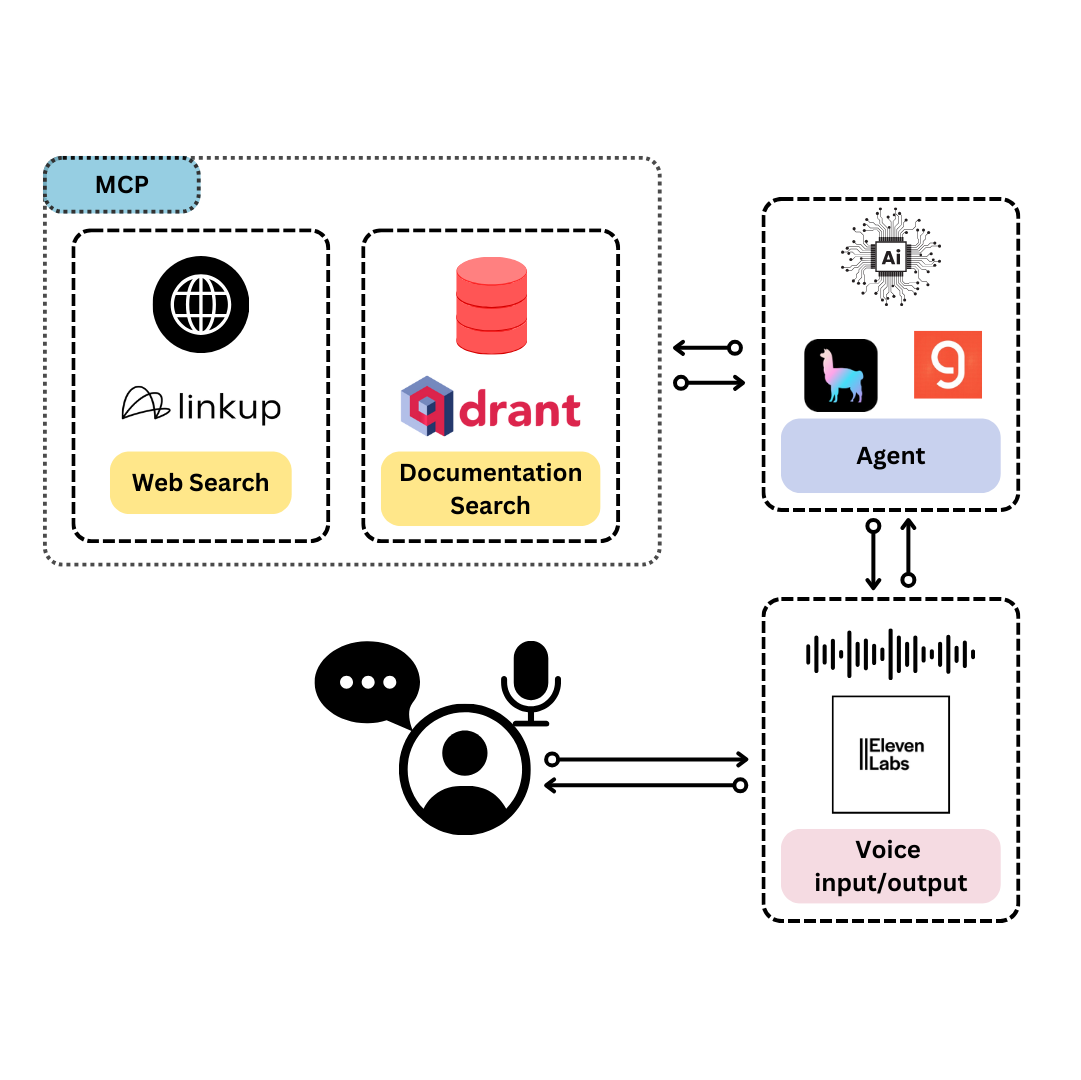

TySVA is aimed at creating a learning space for you to get to know more about TypeScript, leveraging:

- Qdrant local database, with the full documentation for TypeScript

- LinkUp, for web deep search

- MCP servers, for vector search and web search automation

- ElevenLabs, for voice input transcription and voice output generation

- LlamaIndex, for agent workflows

It supports voice input/output, as well as textual input/output.

Install and launch🚀

The first step, common to both the Docker and the source code setup approaches, is to clone the repository and access it:

git clone https://github.com/AstraBert/TySVA.git

cd TySVA

Once there, you can choose one of the two following approaches:

Docker (recommended)🐋

Required: Docker and docker compose

- Add the groq_api_key, elevenlabs_api_key and linkup_api_key variables to the .env.example file (you can get these keys from the respective providers), then rename the file to .env:

mv .env.example .env

- Launch the Docker application:

# If you are on Linux/macOS

bash start_services.sh

# If you are on Windows

.\start_services.ps1

- Or do it manually:

docker compose up vector_db -d

docker compose up mcp -d

docker compose up app -d

You will see the application running on http://localhost:7999/app and you will be able to use it. Depending on your connection and your hardware, the setup might take some time (up to 15 minutes), but only the first time you run it!
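If the application does not start, an incomplete .env file is a likely cause. The following is a minimal sketch, not part of the TySVA repository, that checks whether the three variables named above are present and non-empty. It assumes the file uses plain KEY=VALUE lines with the lowercase names shown in this README; the helper names parse_env and missing_keys are hypothetical.

```python
from pathlib import Path

# Variable names as listed in this README (assumed to be case-sensitive).
REQUIRED_KEYS = ("groq_api_key", "elevenlabs_api_key", "linkup_api_key")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip("'\"")
    return values


def missing_keys(text: str, required=REQUIRED_KEYS) -> list[str]:
    """Return the required keys that are absent or have an empty value."""
    values = parse_env(text)
    return [key for key in required if not values.get(key)]


if __name__ == "__main__":
    env_path = Path(".env")  # use scripts/.env for the source code setup
    if not env_path.exists():
        print(f"{env_path} not found")
    else:
        missing = missing_keys(env_path.read_text())
        print("Missing keys:", ", ".join(missing) if missing else "none")
```

Run it from the repository root after renaming the file; it only reads the file and never prints the key values.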

Source code🗎

Required: Docker, docker compose and conda

- Add the groq_api_key, elevenlabs_api_key and linkup_api_key variables to the .env.example file (you can get these keys from the respective providers), then move the file to scripts/.env:

mv .env.example scripts/.env

- Set up the conda environment and the vector database using the dedicated script:

# For macOS/Linux users

bash setup.sh

# For Windows users

.\setup.ps1

- Or you can do it manually, if you prefer:

docker compose up vector_db -d

conda env create -f environment.yml

- Now you can launch the script to load TypeScript documentation to the vector database:

conda activate typescript-assistant-voice

python3 scripts/data.py

- And, when you’re done, launch the MCP server:

conda activate typescript-assistant-voice

cd scripts

python3 server.py

- Now open another terminal, and run the application:

uvicorn app:app --host 0.0.0.0 --port 7999

You will see the application running on http://localhost:7999/app and you will be able to use it.
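Since the first start can take a while, it may be convenient to poll the URL above until the application answers instead of refreshing the browser. This is a minimal sketch using only the Python standard library; the function names is_up and wait_until_up and the default attempts and delay are assumptions for illustration, not part of TySVA.

```python
import time
import urllib.error
import urllib.request

APP_URL = "http://localhost:7999/app"  # address given in this README


def is_up(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers with a non-error HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout or HTTP error: not ready yet.
        return False


def wait_until_up(url=APP_URL, attempts=90, delay=10.0,
                  probe=is_up, sleep=time.sleep) -> bool:
    """Probe the URL up to `attempts` times, sleeping `delay` seconds between tries."""
    for _ in range(attempts):
        if probe(url):
            return True
        sleep(delay)
    return False
```

With the defaults, wait_until_up() keeps trying for roughly 15 minutes and returns True as soon as the page responds. The probe and sleep parameters exist only so the loop can be exercised without a running server.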

Workflow

The workflow is very simple:

- When you submit a request, if it is audio, it gets transcribed and then submitted to the agent workflow as the starting prompt; if it is text, it is submitted directly to the agent workflow.

- The agent workflow can answer the TypeScript question by retrieving documents from the vector database or by searching the web. It can also respond directly (no tool use) if the answer is simple. All the tools are available through MCP.

- Once the agent is done, the agentic process and the output get summarized, and the summaries are turned into voice output. The voice output is returned along with the agent's textual output.
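The three steps above can be sketched as a single function. This is an illustrative outline under stated assumptions, not the code in the TySVA repository: the Request and Response types and the transcribe, run_agent, summarize and synthesize callables are hypothetical stand-ins for the ElevenLabs, LlamaIndex and MCP pieces.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Request:
    text: Optional[str] = None     # textual input, if any
    audio: Optional[bytes] = None  # recorded voice input, if any


@dataclass
class Response:
    text: str     # the agent's textual answer
    audio: bytes  # spoken summary of the process and the answer


def handle_request(
    request: Request,
    transcribe: Callable[[bytes], str],
    run_agent: Callable[[str], tuple[list[str], str]],
    summarize: Callable[[list[str], str], str],
    synthesize: Callable[[str], bytes],
) -> Response:
    # Step 1: audio is transcribed first; text goes straight to the agent.
    if request.audio is not None:
        prompt = transcribe(request.audio)
    elif request.text is not None:
        prompt = request.text
    else:
        raise ValueError("request needs either text or audio")

    # Step 2: the agent answers, possibly using vector search or web search.
    # `process` stands for the list of steps it took (empty for a direct answer).
    process, answer = run_agent(prompt)

    # Step 3: summarize the process and the output, then turn it into speech.
    summary = summarize(process, answer)
    return Response(text=answer, audio=synthesize(summary))
```

Passing the four stages in as callables keeps the routing logic separate from any particular provider, so each stage can be replaced by a stub when experimenting.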

Contributing

Contributions are always welcome! Follow the contribution guidelines reported here.

License and rights of usage

The software is provided under the MIT license.