Gnome Shell Extension AI Assistant

What is Gnome Shell Extension AI Assistant

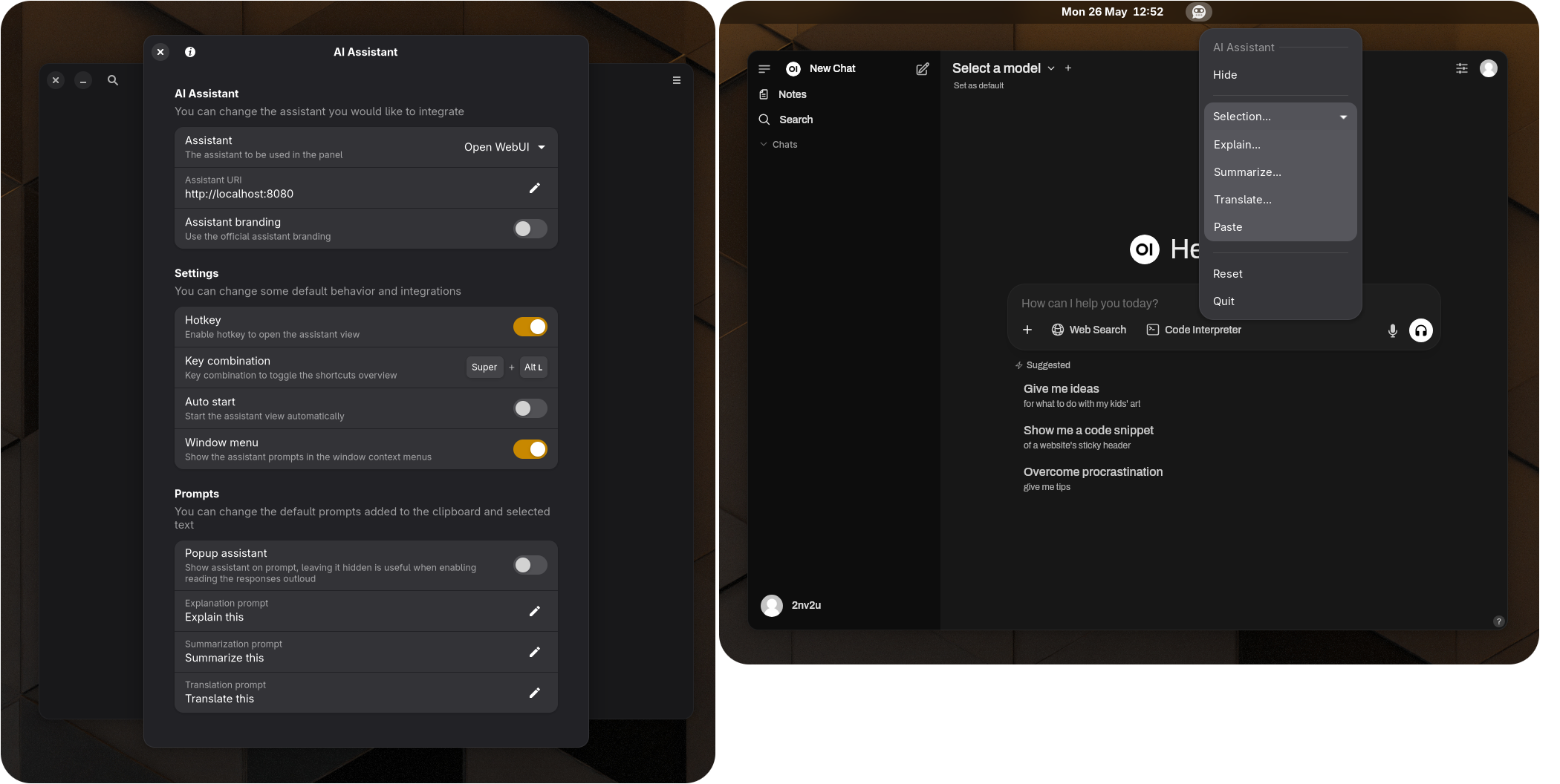

The gnome-shell-extension-ai-assistant integrates chat with a large language model (LLM) into the Gnome Shell environment, so you can interact with AI directly from the desktop.

Use cases

Use cases include querying the LLM about text you have selected, generating content from prompts, and controlling the shell environment through AI.

How to use

To use the gnome-shell-extension-ai-assistant, you can install it from the Gnome extensions website or manually clone the repository and run the installation script. After installation, restart the shell or log out and back in to activate the extension.

Key features

Key features include a background webview toggled from a panel button, sending selected text to the LLM without copying it first, and prompt options in the window and panel contexts. The roadmap adds screenshot support and integrations with more LLMs.

Where to use

The gnome-shell-extension-ai-assistant is useful wherever quick AI access from the desktop helps, for example in software development, content creation, and research.

Gnome Shell - AI Assistant

Artificial intelligence LLM chat integration and MCP server exposing the shell environment.

Featuring:

- Background webview with toggle panel button

- Selecting text allows you to send it to the LLM (no need to copy it first)

- Window context prompt options

- Panel context prompt options

Roadmap:

- Add screenshot support (paste screenshots of windows or the whole screen to be analyzed)

- Add more LLMs

- Add an MCP server for controlling the shell and providing more desktop context

Important Notice: Be cautious about what you share with online AI chatbots; consider running your LLMs locally using Open WebUI (https://openwebui.com/) and Ollama (https://ollama.com/).

Installation from the Gnome extensions website

https://extensions.gnome.org/extension/8224/ai-assistant/

Manual installation

- git clone https://github.com/2nv2u/gnome-shell-extension-ai-assistant.git

- ./gnome-shell-extension-ai-assistant/install.sh

- Restart shell or log out & log in
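The manual steps can also be wrapped in a small script that is safe to re-run. This is a minimal sketch assuming bash and git are installed and that the repository's `install.sh` is executable (as the direct `./gnome-shell-extension-ai-assistant/install.sh` invocation implies). The `install_ai_assistant` function and its "pull if already cloned" behavior are hypothetical conveniences, not part of the repository.

```bash
#!/usr/bin/env bash
# Hypothetical helper: clone (or update) the repository, then run its installer.
# Arguments are optional: repository URL and target directory.
install_ai_assistant() {
  local repo="${1:-https://github.com/2nv2u/gnome-shell-extension-ai-assistant.git}"
  local dir="${2:-gnome-shell-extension-ai-assistant}"

  if [ -d "$dir/.git" ]; then
    # Already cloned: fast-forward to the latest upstream commit instead of failing.
    git -C "$dir" pull --ff-only || return 1
  else
    git clone "$repo" "$dir" || return 1
  fi

  # Run the bundled installer from the current directory, mirroring the manual step.
  "$dir/install.sh" || return 1

  echo "Installed. Restart the shell or log out and log back in to activate it."
}
```

Calling `install_ai_assistant` with no arguments uses the repository URL from this README; the final restart or re-login step still has to be done by hand.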

Debugging during development

clear && export G_MESSAGES_DEBUG=all && dbus-run-session -- gnome-shell --nested --wayland | grep ai-assistant
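The command above starts a separate nested Gnome Shell. To watch the extension in the session you are already running, a common alternative is to follow Gnome Shell's entries in the systemd journal. This is a minimal sketch assuming a systemd-based system with the shell binary at `/usr/bin/gnome-shell`; both function names are hypothetical, and debug-level messages may not appear unless `G_MESSAGES_DEBUG` was set for that session.

```bash
#!/usr/bin/env bash
# Keep only lines that mention the extension (same filter as the nested-shell command).
# --line-buffered flushes each match immediately, so output stays live even when
# it is piped into another command.
filter_ai_assistant() {
  grep --line-buffered 'ai-assistant'
}

# Follow the current session's Gnome Shell log instead of starting a nested shell.
# journalctl treats an executable path as a match on that binary; -o cat prints
# only the message text. Assumes journald and /usr/bin/gnome-shell.
follow_ai_assistant_log() {
  journalctl -f -o cat /usr/bin/gnome-shell | filter_ai_assistant
}
```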
