LangChain MCP

What is LangChain MCP

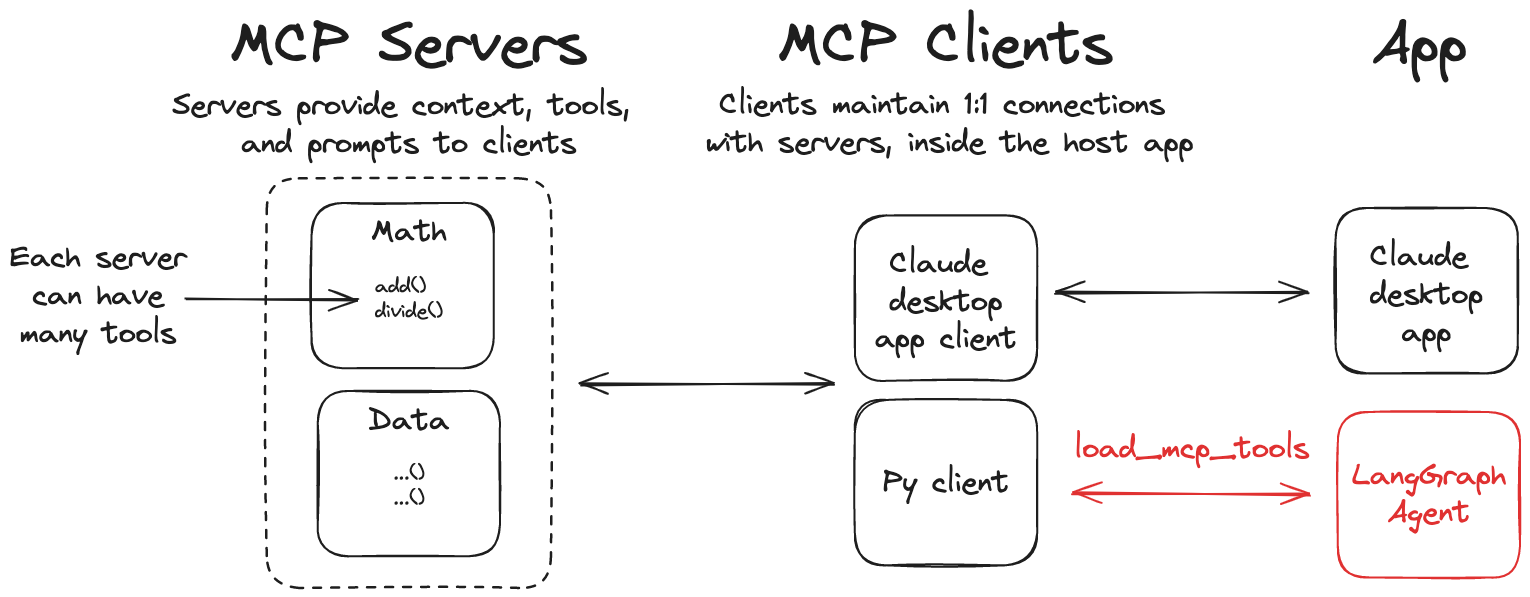

langchain-mcp-adapters is a library that provides a lightweight wrapper to make tools compatible with the Anthropic Model Context Protocol (MCP) for use with LangChain and LangGraph.

Use cases

Use cases include building custom MCP servers (for example, one that exposes math operations), integrating existing MCP tools into applications, and giving AI agents access to additional tools.

How to use

To use langchain-mcp-adapters, install it via pip, set up an MCP server with defined tools, and then create a client to connect to the server and utilize the tools through LangGraph agents.

Key features

Key features include the ability to convert MCP tools into LangChain tools and a client implementation that allows connection to multiple MCP servers and loading tools from them.

Where to use

langchain-mcp-adapters fits any application that needs to use MCP tools from LangChain or LangGraph, such as AI agents and data-processing pipelines.

LangChain MCP Adapters

This library provides a lightweight wrapper that makes Anthropic Model Context Protocol (MCP) tools compatible with LangChain and LangGraph.

Features

- 🛠️ Convert MCP tools into LangChain tools that can be used with LangGraph agents

- 📦 A client implementation that allows you to connect to multiple MCP servers and load tools from them

Installation

pip install langchain-mcp-adapters

Quickstart

Here is a simple example of using the MCP tools with a LangGraph agent.

pip install langchain-mcp-adapters langgraph "langchain[openai]"

export OPENAI_API_KEY=<your_api_key>

Server

First, let’s create an MCP server that can add and multiply numbers.

# math_server.py
from mcp.server.fastmcp import FastMCP

mcp = FastMCP("Math")

@mcp.tool()
def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b

@mcp.tool()
def multiply(a: int, b: int) -> int:
    """Multiply two numbers"""
    return a * b

if __name__ == "__main__":
    mcp.run(transport="stdio")

Client

# Create server parameters for stdio connection
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

from langchain_mcp_adapters.tools import load_mcp_tools
from langgraph.prebuilt import create_react_agent

server_params = StdioServerParameters(
    command="python",
    # Make sure to update to the full absolute path to your math_server.py file
    args=["/path/to/math_server.py"],
)

async with stdio_client(server_params) as (read, write):
    async with ClientSession(read, write) as session:
        # Initialize the connection
        await session.initialize()

        # Get tools
        tools = await load_mcp_tools(session)

        # Create and run the agent
        agent = create_react_agent("openai:gpt-4.1", tools)
        agent_response = await agent.ainvoke({"messages": "what's (3 + 5) x 12?"})
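The client code uses `await` and `async with` at the top level, which only runs as written in an environment that already provides an event loop, such as a notebook. In a plain script, one common approach is to move that code into a coroutine and start it with `asyncio.run`. The sketch below shows only the wrapping pattern: `run_client` is a hypothetical placeholder name, and its body stands in for the real client code.

```python
import asyncio


async def run_client() -> str:
    # Hypothetical placeholder: in a real script, the `async with stdio_client(...)`
    # block from the client example would go here and return the agent response.
    await asyncio.sleep(0)
    return "agent response placeholder"


def main() -> str:
    # asyncio.run creates an event loop, runs the coroutine to completion,
    # and closes the loop afterwards.
    return asyncio.run(run_client())


if __name__ == "__main__":
    print(main())
```

The same wrapper applies to the other client snippets in this document, since they also use top-level `await`.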

Multiple MCP Servers

The library also allows you to connect to multiple MCP servers and load tools from them:

Server

# math_server.py
...

# weather_server.py
from mcp.server.fastmcp import FastMCP

mcp = FastMCP("Weather")

@mcp.tool()
async def get_weather(location: str) -> str:
    """Get weather for location."""
    return "It's always sunny in New York"

if __name__ == "__main__":
    mcp.run(transport="streamable-http")

python weather_server.py

Client

from langchain_mcp_adapters.client import MultiServerMCPClient
from langgraph.prebuilt import create_react_agent

client = MultiServerMCPClient(
    {
        "math": {
            "command": "python",
            # Make sure to update to the full absolute path to your math_server.py file
            "args": ["/path/to/math_server.py"],
            "transport": "stdio",
        },
        "weather": {
            # make sure you start your weather server on port 8000
            "url": "http://localhost:8000/mcp/",
            "transport": "streamable_http",
        }
    }
)
tools = await client.get_tools()
agent = create_react_agent("openai:gpt-4.1", tools)
math_response = await agent.ainvoke({"messages": "what's (3 + 5) x 12?"})
weather_response = await agent.ainvoke({"messages": "what is the weather in nyc?"})

[!note]

The example above starts a new MCP ClientSession for each tool invocation. If you would like to explicitly start a session for a given server, you can do:

from langchain_mcp_adapters.tools import load_mcp_tools

client = MultiServerMCPClient({...})
async with client.session("math") as session:
    tools = await load_mcp_tools(session)
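The connection dictionaries passed to MultiServerMCPClient take a different shape per transport, so a typo in a key name is easy to miss until the client tries to connect. Below is a minimal sketch of a pre-flight check. `check_connections` and `REQUIRED_KEYS` are hypothetical names that are not part of langchain-mcp-adapters, and the required keys are inferred from the examples in this document rather than from the library's schema (the `url` requirement for `sse` is an assumption).

```python
from typing import Any, Dict, List

# Keys each transport appears to need, inferred from this document's examples.
# Assumption: "sse" is configured with a "url", like "streamable_http".
REQUIRED_KEYS = {
    "stdio": ("command", "args"),
    "streamable_http": ("url",),
    "sse": ("url",),
}


def check_connections(connections: Dict[str, Dict[str, Any]]) -> List[str]:
    """Return human-readable problems; an empty list means none were found."""
    problems = []
    for name, conf in connections.items():
        transport = conf.get("transport")
        if transport not in REQUIRED_KEYS:
            problems.append(f"{name}: unrecognized transport {transport!r}")
            continue
        for key in REQUIRED_KEYS[transport]:
            if key not in conf:
                problems.append(f"{name}: missing {key!r} for {transport}")
    return problems


connections = {
    "math": {
        "command": "python",
        "args": ["/path/to/math_server.py"],
        "transport": "stdio",
    },
    "weather": {
        "url": "http://localhost:8000/mcp/",
        "transport": "streamable_http",
    },
}

if __name__ == "__main__":
    print(check_connections(connections))
```

Running a check like this before constructing the client keeps configuration mistakes separate from network or server failures.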

Streamable HTTP

MCP now supports streamable HTTP transport.

To start an example streamable HTTP server, run the following:

cd examples/servers/streamable-http-stateless/

uv run mcp-simple-streamablehttp-stateless --port 3000

Alternatively, you can use FastMCP directly (as in the examples above).

To use it with the Python MCP SDK's streamablehttp_client:

# Use server from examples/servers/streamable-http-stateless/
from mcp import ClientSession
from mcp.client.streamable_http import streamablehttp_client

from langgraph.prebuilt import create_react_agent
from langchain_mcp_adapters.tools import load_mcp_tools

async with streamablehttp_client("http://localhost:3000/mcp/") as (read, write, _):
    async with ClientSession(read, write) as session:
        # Initialize the connection
        await session.initialize()

        # Get tools
        tools = await load_mcp_tools(session)
        agent = create_react_agent("openai:gpt-4.1", tools)
        math_response = await agent.ainvoke({"messages": "what's (3 + 5) x 12?"})

Use it with MultiServerMCPClient:

# Use server from examples/servers/streamable-http-stateless/
from langchain_mcp_adapters.client import MultiServerMCPClient
from langgraph.prebuilt import create_react_agent

client = MultiServerMCPClient(
    {
        "math": {
            "transport": "streamable_http",
            "url": "http://localhost:3000/mcp/"
        },
    }
)
tools = await client.get_tools()
agent = create_react_agent("openai:gpt-4.1", tools)
math_response = await agent.ainvoke({"messages": "what's (3 + 5) x 12?"})

Passing runtime headers

When connecting to MCP servers, you can include custom headers (e.g., for authentication or tracing) using the headers field in the connection configuration. This is supported for the following transports:

- sse

- streamable_http

Example: passing headers with MultiServerMCPClient

from langchain_mcp_adapters.client import MultiServerMCPClient
from langgraph.prebuilt import create_react_agent

client = MultiServerMCPClient(
    {
        "weather": {
            "transport": "streamable_http",
            "url": "http://localhost:8000/mcp",
            "headers": {
                "Authorization": "Bearer YOUR_TOKEN",
                "X-Custom-Header": "custom-value"
            },
        }
    }
)
tools = await client.get_tools()
agent = create_react_agent("openai:gpt-4.1", tools)
response = await agent.ainvoke({"messages": "what is the weather in nyc?"})

Only sse and streamable_http transports support runtime headers. These headers are passed with every HTTP request to the MCP server.
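Because the headers are sent on every request, it is usually safer to read a token from the environment than to hard-code it in the configuration. The sketch below builds the `weather` connection entry that way. `weather_connection` is a hypothetical helper and `WEATHER_MCP_TOKEN` is an assumed environment variable name; neither comes from the library.

```python
import os
from typing import Any, Dict, Optional


def weather_connection(token: Optional[str] = None) -> Dict[str, Any]:
    """Build a streamable_http connection entry, adding headers only if a token exists."""
    # WEATHER_MCP_TOKEN is an assumed variable name used for illustration.
    token = token or os.environ.get("WEATHER_MCP_TOKEN")
    connection: Dict[str, Any] = {
        "transport": "streamable_http",
        "url": "http://localhost:8000/mcp",
    }
    if token:
        connection["headers"] = {"Authorization": f"Bearer {token}"}
    return connection


if __name__ == "__main__":
    # The resulting dictionary would be passed as {"weather": weather_connection()}.
    print(weather_connection("example-token"))
```

Omitting the `headers` key entirely when no token is available keeps the entry valid for servers that do not require authentication.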

Using with LangGraph StateGraph

from langchain_mcp_adapters.client import MultiServerMCPClient
from langgraph.graph import StateGraph, MessagesState, START
from langgraph.prebuilt import ToolNode, tools_condition

from langchain.chat_models import init_chat_model
model = init_chat_model("openai:gpt-4.1")

client = MultiServerMCPClient(
    {
        "math": {
            "command": "python",
            # Make sure to update to the full absolute path to your math_server.py file
            "args": ["./examples/math_server.py"],
            "transport": "stdio",
        },
        "weather": {
            # make sure you start your weather server on port 8000
            "url": "http://localhost:8000/mcp/",
            "transport": "streamable_http",
        }
    }
)
tools = await client.get_tools()

def call_model(state: MessagesState):
    response = model.bind_tools(tools).invoke(state["messages"])
    return {"messages": response}

builder = StateGraph(MessagesState)
builder.add_node(call_model)
builder.add_node(ToolNode(tools))
builder.add_edge(START, "call_model")
builder.add_conditional_edges(
    "call_model",
    tools_condition,
)
builder.add_edge("tools", "call_model")
graph = builder.compile()
math_response = await graph.ainvoke({"messages": "what's (3 + 5) x 12?"})
weather_response = await graph.ainvoke({"messages": "what is the weather in nyc?"})
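The graph wiring above encodes a loop: the model node runs, a condition checks whether the reply requests a tool, the tool node runs if so, and control returns to the model. The following plain-Python sketch imitates that control flow without LangGraph or an LLM. Everything in it is a hypothetical stand-in: `fake_model` is a scripted replacement for `call_model`, the message dictionaries are a simplified format, and the loop is not how LangGraph is implemented.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]


def add(a: int, b: int) -> int:
    return a + b


def multiply(a: int, b: int) -> int:
    return a * b


TOOLS: Dict[str, Callable[..., int]] = {"add": add, "multiply": multiply}


def fake_model(messages: List[Message]) -> Message:
    """Scripted stand-in for call_model: request add, then multiply, then answer."""
    results = [m["content"] for m in messages if m["role"] == "tool"]
    if len(results) == 0:
        return {"role": "assistant", "tool_call": {"name": "add", "args": {"a": 3, "b": 5}}}
    if len(results) == 1:
        return {"role": "assistant",
                "tool_call": {"name": "multiply", "args": {"a": results[0], "b": 12}}}
    return {"role": "assistant", "content": str(results[-1])}


def run_loop(question: str) -> List[Message]:
    messages: List[Message] = [{"role": "user", "content": question}]
    while True:
        reply = fake_model(messages)              # corresponds to the "call_model" node
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:                          # condition: no tool call, so stop
            return messages
        result = TOOLS[call["name"]](**call["args"])  # corresponds to the "tools" node
        messages.append({"role": "tool", "content": result})


if __name__ == "__main__":
    print(run_loop("what's (3 + 5) x 12?")[-1]["content"])
```

In the real graph, the edge from "tools" back to "call_model" plays the role of the `while` loop, and `tools_condition` plays the role of the `if call is None` check.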

Using with LangGraph API Server

[!TIP]

See the LangGraph documentation for a guide on getting started with the LangGraph API server.

If you want to run a LangGraph agent that uses MCP tools in a LangGraph API server, you can use the following setup:

# graph.py
from langchain_mcp_adapters.client import MultiServerMCPClient
from langgraph.prebuilt import create_react_agent

async def make_graph():
    client = MultiServerMCPClient(
        {
            "math": {
                "command": "python",
                # Make sure to update to the full absolute path to your math_server.py file
                "args": ["/path/to/math_server.py"],
                "transport": "stdio",
            },
            "weather": {
                # make sure you start your weather server on port 8000
                "url": "http://localhost:8000/mcp/",
                "transport": "streamable_http",
            }
        }
    )
    tools = await client.get_tools()
    agent = create_react_agent("openai:gpt-4.1", tools)
    return agent

In your langgraph.json, make sure to specify make_graph as your graph entrypoint:

{
  "dependencies": [
    "."
  ],
  "graphs": {
    "agent": "./graph.py:make_graph"
  }
}

Add LangChain tools to a FastMCP server

Use to_fastmcp to convert LangChain tools to FastMCP, and then add them to the FastMCP server via the initializer:

[!NOTE]

The tools argument is only available in FastMCP as of mcp >= 1.9.1

from langchain_core.tools import tool
from langchain_mcp_adapters.tools import to_fastmcp
from mcp.server.fastmcp import FastMCP

@tool
def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b

fastmcp_tool = to_fastmcp(add)

mcp = FastMCP("Math", tools=[fastmcp_tool])
mcp.run(transport="stdio")
