llm-mcp-rag

What is llm-mcp-rag?

llm-mcp-rag is an augmented language model framework that integrates multiple MCP servers and utilizes Retrieval-Augmented Generation (RAG) to enhance the capabilities of language models without relying on external frameworks like LangChain or LlamaIndex.

Use cases

Use cases for llm-mcp-rag include summarizing web pages, querying local documents for relevant information, and generating context-aware responses in chat applications.

How to use

To use llm-mcp-rag, configure one or more MCP servers, set the system prompt, and invoke the agent with a prompt. The agent retrieves relevant context and uses it to generate a response.

Key features

Key features of llm-mcp-rag include support for multiple MCP servers, retrieval of relevant information from knowledge sources for injection into the model's context, and a deliberately minimal RAG implementation.

Where to use

llm-mcp-rag can be used in various fields such as natural language processing, information retrieval, content summarization, and any application requiring enhanced conversational agents.



Content

[!INFO]

This repo is a fork of KelvinQiu802’s llm-mcp-rag. The new dev branch is used for self-learning.

LLM + MCP + RAG

Goals

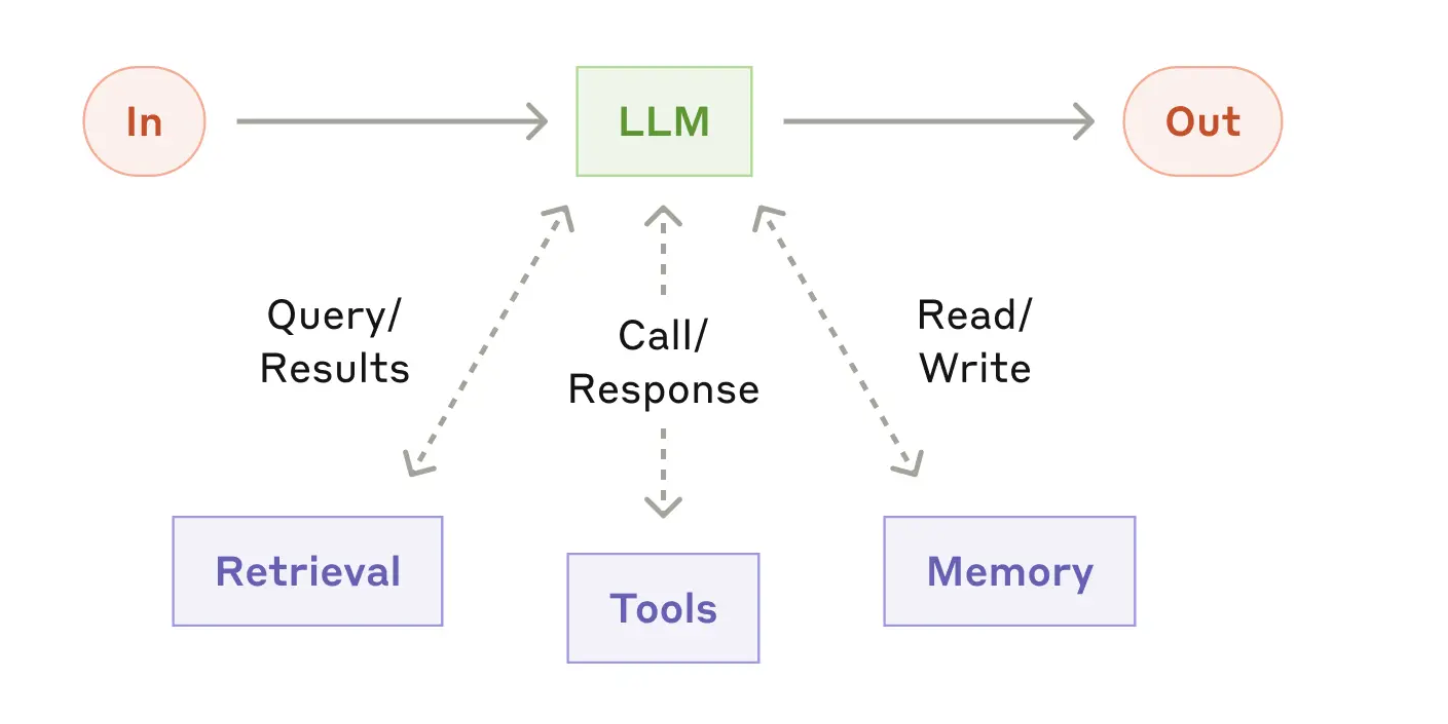

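- Augmented LLM (Chat + MCP + RAG)

- No framework dependencies

  - No LangChain, LlamaIndex, CrewAI, or AutoGen

- MCP

  - Supports configuring multiple MCP servers

- RAG, extremely simplified version

  - Retrieve relevant information from the knowledge source and inject it into the context

- Tasks

  - Read a web page → write a summary → save it to a file

  - Local documents → look up relevant material → inject it into the context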

The augmented LLM

    classDiagram
        class Agent {
            +init()
            +close()
            +invoke(prompt: string)
            -mcpClients: MCPClient[]
            -llm: ChatOpenAI
            -model: string
            -systemPrompt: string
            -context: string
        }
        class ChatOpenAI {
            +chat(prompt?: string)
            +appendToolResult(toolCallId: string, toolOutput: string)
            -llm: OpenAI
            -model: string
            -messages: OpenAI.Chat.ChatCompletionMessageParam[]
            -tools: Tool[]
        }
        class EmbeddingRetriever {
            +embedDocument(document: string)
            +embedQuery(query: string)
            +retrieve(query: string, topK: number)
            -embeddingModel: string
            -vectorStore: VectorStore
        }
        class MCPClient {
            +init()
            +close()
            +getTools()
            +callTool(name: string, params: Record<string, any>)
            -mcp: Client
            -command: string
            -args: string[]
            -transport: StdioClientTransport
            -tools: Tool[]
        }
        class VectorStore {
            +addEmbedding(embedding: number[], document: string)
            +search(queryEmbedding: number[], topK: number)
            -vectorStore: VectorStoreItem[]
        }
        class VectorStoreItem {
            -embedding: number[]
            -document: string
        }
        Agent --> MCPClient : uses
        Agent --> ChatOpenAI : interacts with
        ChatOpenAI --> ToolCall : manages
        EmbeddingRetriever --> VectorStore : uses
        VectorStore --> VectorStoreItem : contains
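The diagram lists the methods but not how `Agent.invoke` ties them together. The following is a minimal sketch of one plausible tool-call loop, not the repository's actual implementation. The interfaces `ChatModel`, `ToolClient`, `ToolCall`, and `ChatResult`, the `maxRounds` limit, and the "first client that exposes the tool" routing rule are all assumptions introduced for illustration; only the method names `chat`, `appendToolResult`, `getTools`, and `callTool` come from the diagram.

```typescript
// Hypothetical, simplified shapes loosely modelled on the class diagram.
interface ToolCall {
  id: string;
  name: string;
  args: Record<string, unknown>;
}

interface ChatResult {
  content: string;
  toolCalls: ToolCall[];
}

interface ChatModel {
  // Sends an optional new prompt and returns the model's reply.
  chat(prompt?: string): Promise<ChatResult>;
  // Records a tool's output so the next chat() call can see it.
  appendToolResult(toolCallId: string, toolOutput: string): void;
}

interface ToolClient {
  getTools(): { name: string }[];
  callTool(name: string, params: Record<string, unknown>): Promise<string>;
}

// Assumed loop: keep calling the model until it replies without tool calls.
async function invoke(
  llm: ChatModel,
  clients: ToolClient[],
  prompt: string,
  maxRounds = 10,
): Promise<string> {
  let result = await llm.chat(prompt);
  let rounds = 0;
  while (result.toolCalls.length > 0) {
    if (rounds++ >= maxRounds) {
      throw new Error(`No final answer after ${maxRounds} tool rounds`);
    }
    for (const call of result.toolCalls) {
      // Route the call to the first client that exposes a tool with this name.
      const client = clients.find((c) =>
        c.getTools().some((t) => t.name === call.name),
      );
      const output = client
        ? await client.callTool(call.name, call.args)
        : `Tool not found: ${call.name}`;
      llm.appendToolResult(call.id, output);
    }
    // No new prompt here: the model continues from the appended tool results.
    result = await llm.chat();
  }
  return result.content;
}
```

Capping the number of rounds is a design choice of this sketch: it keeps a model that requests tools on every turn from looping indefinitely.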

Dependencies

git clone git@github.com:KelvinQiu802/ts-node-esm-template.git

pnpm install

pnpm add dotenv openai @modelcontextprotocol/sdk chalk

LLM

MCP

RAG

- Retrieval Augmented Generation

- Various loaders: https://python.langchain.com/docs/integrations/document_loaders/

- SiliconFlow (硅基流动)

  - Invitation code: x771DtAF

- JSON data

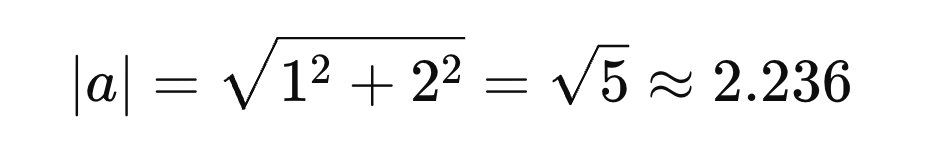
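To make the retrieval step concrete, here is a minimal in-memory sketch in the spirit of the `VectorStore` from the class diagram. It is an illustration under assumptions, not the project's code: the toy three-dimensional embeddings are hand-written (in practice they would come from an embedding API), and the `cosine` and `injectContext` helpers and the prompt layout are hypothetical.

```typescript
interface VectorStoreItem {
  embedding: number[];
  document: string;
}

// Cosine similarity between two vectors; returns 0 if either has zero length.
function cosine(a: number[], b: number[]): number {
  let dotProduct = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < Math.min(a.length, b.length); i++) {
    dotProduct += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  const denominator = Math.sqrt(normA) * Math.sqrt(normB);
  return denominator === 0 ? 0 : dotProduct / denominator;
}

class VectorStore {
  private items: VectorStoreItem[] = [];

  addEmbedding(embedding: number[], document: string): void {
    this.items.push({ embedding, document });
  }

  // Returns the topK documents, most similar to the query first.
  search(queryEmbedding: number[], topK: number): string[] {
    return this.items
      .map((item) => ({
        document: item.document,
        score: cosine(queryEmbedding, item.embedding),
      }))
      .sort((x, y) => y.score - x.score)
      .slice(0, topK)
      .map((entry) => entry.document);
  }
}

// Hypothetical helper: place the retrieved documents ahead of the question.
function injectContext(question: string, documents: string[]): string {
  return `Context:\n${documents.join("\n")}\n\nQuestion: ${question}`;
}

// Usage with toy embeddings (assumed values, not real model output).
const demoStore = new VectorStore();
demoStore.addEmbedding([1, 0, 0], "Cats are small felines.");
demoStore.addEmbedding([0, 1, 0], "Dogs are loyal companions.");
demoStore.addEmbedding([0.9, 0.1, 0], "Kittens are young cats.");
const demoHits = demoStore.search([1, 0, 0], 2);
console.log(injectContext("What is a kitten?", demoHits));
```

A linear scan over every stored embedding is fine for a handful of documents, which fits the "extremely simplified" goal; larger collections would usually call for an indexed vector database.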

Vectors

- Dimension

- Magnitude (norm)

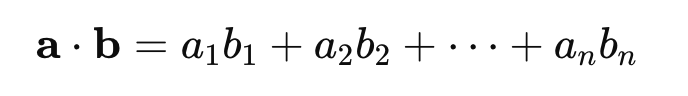

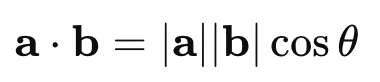

- Dot product

  - Multiply the elements at matching positions, then sum the products

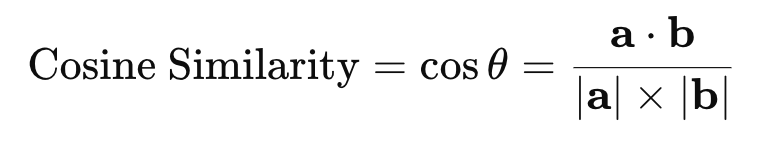

- Cosine similarity (cos)

  - 1 → exactly the same direction

  - 0 → perpendicular

  - -1 → exactly opposite directions
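The vector notions above can be sketched in a few lines of TypeScript. The function names `dot`, `magnitude`, and `cosineSimilarity` are chosen here for illustration and are not taken from the repository. Cosine similarity is computed as cos(a, b) = (a · b) / (|a| |b|).

```typescript
// Dot product: multiply elements at matching positions, then sum.
function dot(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same dimension");
  }
  return a.reduce((sum, value, i) => sum + value * b[i], 0);
}

// Magnitude (Euclidean norm): square root of the vector's dot product with itself.
function magnitude(v: number[]): number {
  return Math.sqrt(dot(v, v));
}

// Cosine similarity: dot product divided by the product of the magnitudes.
function cosineSimilarity(a: number[], b: number[]): number {
  const denominator = magnitude(a) * magnitude(b);
  if (denominator === 0) {
    throw new Error("Cosine similarity is undefined for a zero vector");
  }
  return dot(a, b) / denominator;
}

console.log(dot([1, 2, 3], [4, 5, 6])); // 32
console.log(magnitude([3, 4])); // 5
console.log(cosineSimilarity([1, 0], [1, 0])); // 1  (same direction)
console.log(cosineSimilarity([1, 0], [0, 1])); // 0  (perpendicular)
console.log(cosineSimilarity([1, 0], [-1, 0])); // -1 (opposite directions)
```

Because cosine similarity divides out both magnitudes, it depends only on the angle between the vectors, which makes it a common choice for comparing text embeddings of different lengths.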
