MCP Execution Engine

What is MCP Execution Engine

The mcp-execution-engine is a modular and lightweight execution engine designed for AI agents, automation pipelines, and multi-step tool workflows. It allows for dynamic execution of multiple tools in sequence and supports various interaction methods including CLI, RESTful API, and Telegram Bot, all within a single Docker image.

Use cases

Use cases for the mcp-execution-engine include automating workflows for AI agents, integrating multiple tools in a sequence for complex tasks, and providing a unified interface for various interaction methods in automation pipelines.

How to use

To use the mcp-execution-engine, first install Docker, then pull the image with `docker pull sidwoong/xxx-mcp-execution-engine`. You can then start it in CLI mode, API server mode, or Telegram Bot mode by running the matching `docker run` command with your `.env` file.

Key features

Key features of the mcp-execution-engine include dynamic execution of tool chains, interaction via CLI, HTTP API, or Telegram, support for custom tools through a modular system, and Docker-native deployment with no local dependencies.

Where to use

The mcp-execution-engine is suitable for backend execution engines, plugin-based AI systems, and hybrid AI workflows that require both on-chain and off-chain processing.

🧠 XXX MCP Execution Engine

A modular, lightweight ToolCall execution engine designed for AI agents, automation pipelines, and multi-step tool workflows. It supports CLI interaction, RESTful API service, and Telegram Bot access — all packaged in a single Docker image.

✨ Product Overview

XXX MCP Execution Engine provides a unified runtime for structured ToolCall chains. It allows LLM agents or users to:

- 🔧 Dynamically execute multiple tools in sequence

- 💬 Interact via terminal, HTTP API, or Telegram

- 📦 Plug in custom tools via the modular `tools/` system

- 🧱 Use anywhere via Docker, with no manual setup required

This project is inspired by the idea of decoupling agent planning from tool execution, making it perfect for backend execution engines, plugin-based AI systems, or on-chain/off-chain hybrid AI workflows.
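The separation between planning and execution described above can be illustrated with a small sketch. This is not the engine's actual code: the `ToolCall` dataclass, the `TOOLS` registry, and `run_chain` are hypothetical names chosen for illustration, and the two toy tools stand in for real ones. A planner (an LLM or a user) would produce the list of calls; the executor only runs them in order.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ToolCall:
    """One step of a plan: which tool to run and with what arguments (hypothetical shape)."""
    tool: str
    args: Dict[str, Any] = field(default_factory=dict)


# Hypothetical registry mapping tool names to plain Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {
    "add": lambda a, b: a + b,
    "upper": lambda text: text.upper(),
}


def run_chain(calls: List[ToolCall]) -> List[Dict[str, Any]]:
    """Execute each ToolCall in order and record the step, tool name, and result."""
    results = []
    for step, call in enumerate(calls, start=1):
        if call.tool not in TOOLS:
            raise KeyError(f"unknown tool: {call.tool}")
        output = TOOLS[call.tool](**call.args)
        results.append({"step": step, "tool": call.tool, "result": output})
    return results


plan = [ToolCall("add", {"a": 2, "b": 3}), ToolCall("upper", {"text": "done"})]
for record in run_chain(plan):
    print(record)
```

Because the executor never decides what to run, the same chain could in principle be submitted from a terminal, an HTTP request, or a chat message.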

✅ Built-in modes: CLI / API Server / Telegram Bot

🐳 Docker-native, zero local dependency

🔌 Supports future extensibility with user-defined tools

🐳 How to Install Docker (for Beginners)

macOS / Windows / Linux

- Download from the official Docker site:
  👉 https://www.docker.com/products/docker-desktop

- Follow the installation steps.

- After installation, run:

docker --version

✅ If you see version output, Docker is installed.
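If you would rather script this check, for example in a setup helper, the following sketch runs the same `docker --version` command from Python. The function name `docker_version` is an illustrative choice, not part of this project.

```python
import shutil
import subprocess
from typing import Optional


def docker_version(binary: str = "docker") -> Optional[str]:
    """Return the output of `<binary> --version`, or None if it cannot be run."""
    path = shutil.which(binary)
    if path is None:
        return None  # the binary is not on PATH
    try:
        completed = subprocess.run(
            [path, "--version"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if completed.returncode != 0:
        return None
    return completed.stdout.strip()


version = docker_version()
print(version if version else "Docker not found; install it first.")
```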

⚙️ Environment Configuration (.env)

    MCP_LANG=ZH
    OPENAI_API_KEY=sk-xxxxxxxxxx
    WALLET_ADDRESS=0xxxxxxxxxxx

| Variable | Description | Required |
|---|---|---|
| `MCP_LANG` | Language: EN or ZH | ✅ Yes |
| `OPENAI_API_KEY` | OpenAI API Key | ✅ Yes |
| `WALLET_ADDRESS` | Wallet address | ✅ Yes |
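Since all three variables are required, it can help to check the file before starting a container. The sketch below is a hypothetical pre-flight check, not something shipped with the image: `parse_env` and `check_env` are illustrative names, the parser handles only plain `KEY=VALUE` lines, and the assumption that `MCP_LANG` must be exactly `EN` or `ZH` in upper case is inferred from the table rather than confirmed.

```python
from typing import Dict, List

REQUIRED = ("MCP_LANG", "OPENAI_API_KEY", "WALLET_ADDRESS")


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def check_env(values: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means nothing obvious is missing."""
    problems = [f"missing {name}" for name in REQUIRED if not values.get(name)]
    lang = values.get("MCP_LANG")
    if lang and lang not in ("EN", "ZH"):  # assumed to be case-sensitive
        problems.append("MCP_LANG must be EN or ZH")
    return problems


sample = "MCP_LANG=ZH\nOPENAI_API_KEY=sk-xxxxxxxxxx\nWALLET_ADDRESS=0xxxxxxxxxxx\n"
print(check_env(parse_env(sample)))  # prints []
```

To check a real file, pass `open(".env").read()` to `parse_env` instead of the sample string.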

🚀 Quick Start

Pull the Docker Image

docker pull sidwoong/xxx-mcp-execution-engine

Start CLI Mode

docker run --rm -it --env-file .env sidwoong/xxx-mcp-execution-engine start cli

API Server Mode

docker run --rm -it --env-file .env -p 8080:8080 sidwoong/xxx-mcp-execution-engine start server

Telegram Bot Mode

docker run --rm -it --env-file .env sidwoong/xxx-mcp-execution-engine start telegram-bot
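The three commands above differ only in the mode name and in the port mapping used by the server. A launcher script could assemble them as in the following sketch, where `build_run_command` is a hypothetical helper and only the flags shown above are used. It assumes the container listens on port 8080, as in the server command.

```python
from typing import List

IMAGE = "sidwoong/xxx-mcp-execution-engine"
MODES = ("cli", "server", "telegram-bot")


def build_run_command(mode: str, env_file: str = ".env", host_port: int = 8080) -> List[str]:
    """Build the `docker run` argument list for one of the three documented modes."""
    if mode not in MODES:
        raise ValueError(f"mode must be one of {MODES}, got {mode!r}")
    command = ["docker", "run", "--rm", "-it", "--env-file", env_file]
    if mode == "server":
        # Only the API server publishes a port in the documented commands.
        command += ["-p", f"{host_port}:8080"]
    return command + [IMAGE, "start", mode]


print(" ".join(build_run_command("server")))
```

The returned list can be passed directly to `subprocess.run` if you want to launch the container from Python.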

🧩 Features

| Module | Description |
|---|---|
| 🧠 CLI | Interactive terminal tool execution |
| 🌐 API Server | FastAPI-powered tool executor endpoint |
| 🤖 Telegram | Quickly build your own private assistant via Bot |
| 🔌 Tools | Auto-loads Python modules from tools/ directory |
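Auto-loading modules from a directory is commonly done with the standard `importlib` machinery. The sketch below shows one way it could work. It is not the engine's actual loader: `load_tools` is a hypothetical name, and the convention that each tool module exposes a `run` function is an assumption made for the demo.

```python
import importlib.util
import tempfile
from pathlib import Path
from types import ModuleType
from typing import Dict


def load_tools(directory: str) -> Dict[str, ModuleType]:
    """Import every non-private .py file in `directory`, keyed by file stem."""
    modules = {}
    for path in sorted(Path(directory).glob("*.py")):
        if path.name.startswith("_"):
            continue  # skip __init__.py and private helpers
        spec = importlib.util.spec_from_file_location(path.stem, path)
        if spec is None or spec.loader is None:
            continue
        module = importlib.util.module_from_spec(spec)
        spec.loader.exec_module(module)  # runs the module's top-level code
        modules[path.stem] = module
    return modules


# Demo with a throwaway directory standing in for tools/.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "echo.py").write_text("def run(text):\n    return text\n")
    tools = load_tools(tmp)
    print(tools["echo"].run("hello"))  # prints hello
```

Loading by file path keeps tools independent of the package layout, at the cost of executing whatever code is placed in the directory, so only trusted modules should go there.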

🔍 Preview

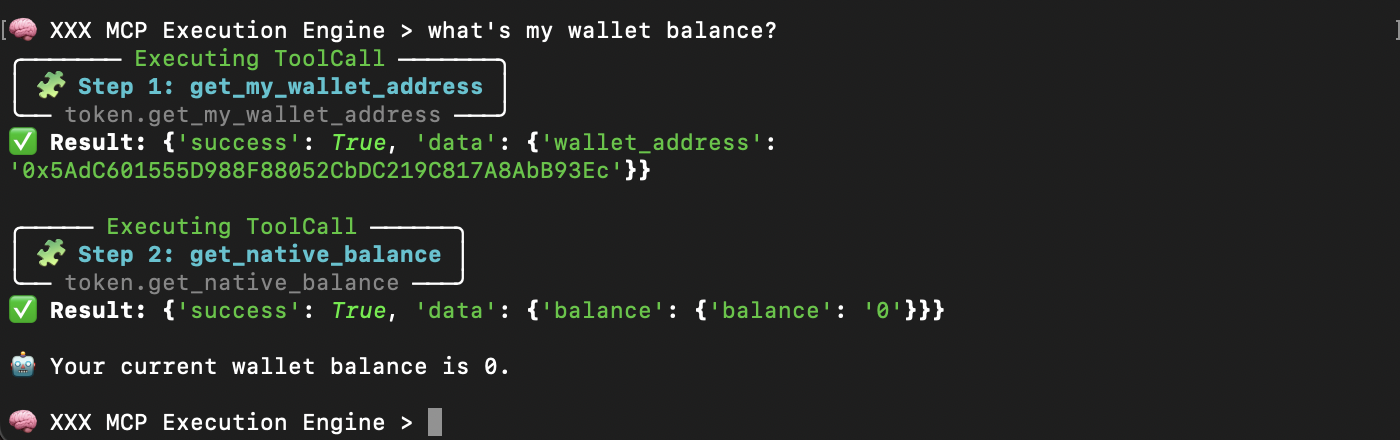

🧠 CLI Mode (start cli)

Launch an interactive session in the terminal and execute ToolCall chains step by step.

Each tool call is clearly displayed with execution flow, tool name, and results.

🌐 API Server Mode (start server)

Run as an HTTP API service using FastAPI.
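The README does not list the server's routes or request schema, so the following is only a sketch of what a client request might look like. The `/execute` path and the `{"calls": [...]}` payload are assumptions, not the project's documented API. FastAPI applications serve interactive documentation at `/docs` by default (unless disabled), which would be the place to look up the real routes once the server is running.

```python
import json
import urllib.request
from typing import Any, Dict, List


def build_request(
    calls: List[Dict[str, Any]],
    base_url: str = "http://localhost:8080",
    path: str = "/execute",  # hypothetical route, check the server's own docs
) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request carrying a list of tool calls."""
    body = json.dumps({"calls": calls}).encode("utf-8")  # hypothetical payload shape
    return urllib.request.Request(
        base_url + path,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_request([{"tool": "example_tool", "args": {}}])
print(request.get_method(), request.full_url)

# To send it against a running server:
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(json.load(response))
```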

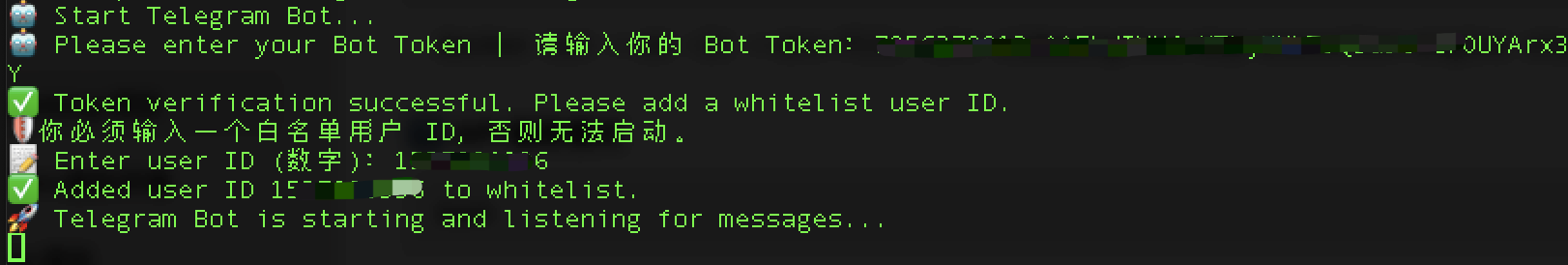

🤖 Telegram Mode (start telegram-bot)

Turn your bot into a private assistant.

Easily turn MCP into your personal assistant inside Telegram.

Users can chat naturally and trigger multi-step ToolCall executions.

📮 Contact

Author: Sid Woong

GitHub: SidWoong

X (Twitter): @SidWoong

✅ The project is actively maintained and its content is continuously updated. For any questions, feel free to contact the author on X: @SidWoong
